Rethinking LLM Integration: AI Function, a Developer-Friendly Abstraction

Large Language Models (LLMs) are redefining the boundaries of what software can accomplish. Beyond generating emails or code, LLMs can now be integrated into programs to tackle tasks that were once difficult to solve with hard-coded rules alone. From extracting insights from vast document repositories to analyzing sentiment in customer feedback, or even driving robotic systems, LLMs enable developers to create software with new cognitive capabilities and adaptability.

Challenges with Current LLM APIs

The current landscape of LLM APIs presents significant challenges for developers seeking to harness these capabilities. While developers need straightforward ways to integrate LLMs into existing systems, they are often confronted with APIs that reflect a different set of priorities.

Developers face several hurdles:

- Structuring outputs becomes unnecessarily complex.

- Navigating low-level LLM details, including the challenge of selecting appropriate models, can be overwhelming and time-consuming.

- The APIs present a steep learning curve, requiring developers to understand a heavyweight, chatbot-based interface that may be overkill for many applications.

Example: Analyzing News Articles

Let's take a closer look at what this means in practice. Imagine you're tasked with creating a function to analyze news articles, a seemingly straightforward application of LLM capabilities. Here's what you might encounter using traditional LLM APIs:

import json

from openai import OpenAI

client = OpenAI()


def analyze_news(article_text):
    tools = [
        {
            "type": "function",
            "function": {
                "name": "analyze_news_article",
                "description": "Analyze a news article by extracting keywords and determining a sentiment score.",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "keywords": {
                            "type": "array",
                            "items": {"type": "string"},
                            "description": "A list of keywords extracted from the news article"
                        },
                        "sentiment_score": {
                            "type": "number",
                            "description": "A sentiment score for the article, ranging from 0 (very negative) to 1 (very positive)"
                        }
                    },
                    "required": ["keywords", "sentiment_score"]
                }
            }
        }
    ]

    messages = [
        {
            "role": "system",
            "content": "Analyze a given news article by extracting keywords and determining a sentiment score between 0 and 1. The sentiment score should reflect the overall emotion and tone of the article, with 0 being very negative and 1 being very positive. Provide both the keywords and the sentiment score as outputs."
        },
        {"role": "user", "content": article_text}
    ]

    response = client.chat.completions.create(
        model="gpt-4o",
        messages=messages,
        tools=tools,
        tool_choice={
            "type": "function",
            "function": {"name": "analyze_news_article"}
        }
    )

    tool_calls = response.choices[0].message.tool_calls
    if tool_calls:
        function_args = json.loads(tool_calls[0].function.arguments)
        return function_args
    else:
        return {"error": "No function was called"}


# Example usage
article = """
The global economy showed signs of recovery in the third quarter,
with major stock indices posting significant gains. Experts attribute
this upturn to easing inflation pressures and the Federal Reserve's
decision to hold interest rates steady. However, geopolitical tensions
in Eastern Europe continue to cast a shadow over long-term economic
prospects. Despite these challenges, consumer confidence has reached
its highest level since the pandemic began, indicating a potentially
robust holiday shopping season ahead.
"""

result = analyze_news(article)
print(json.dumps(result, indent=2))

Outputs:

{
  "keywords": [
    "global economy",
    "recovery",
    "third quarter",
    "stock indices",
    "gains",
    "inflation",
    "Federal Reserve",
    "interest rates",
    "geopolitical tensions",
    "Eastern Europe",
    "long-term prospects",
    "consumer confidence",
    "holiday shopping season"
  ],
  "sentiment_score": 0.75
}
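The work does not necessarily end there. The tool-call arguments arrive as a JSON string generated by the model, and `analyze_news` can also return its `{"error": ...}` fallback, so a caller may still want to check the result before using it. Below is a minimal sketch of such a check, assuming only the two fields from the schema above; `validate_analysis` is a hypothetical helper written for this illustration, not part of the OpenAI client.

```python
from numbers import Real


def validate_analysis(result: dict) -> dict:
    """Check that `result` has the shape the hand-written schema asks for.

    Hypothetical helper: raises ValueError when a field is missing or has
    the wrong type or range, and returns the dict unchanged otherwise.
    """
    keywords = result.get("keywords")
    if not isinstance(keywords, list) or not all(isinstance(k, str) for k in keywords):
        raise ValueError("'keywords' must be a list of strings")

    score = result.get("sentiment_score")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(score, bool) or not isinstance(score, Real):
        raise ValueError("'sentiment_score' must be a number")
    if not 0 <= score <= 1:
        raise ValueError("'sentiment_score' must be between 0 and 1")

    return result


checked = validate_analysis({"keywords": ["inflation"], "sentiment_score": 0.75})
print(checked["sentiment_score"])  # prints 0.75
```

Passing the error fallback (`{"error": "No function was called"}`) to this helper raises `ValueError`, which makes the failure explicit instead of letting a malformed dict flow downstream.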

As you can see, what should be a simple task becomes a complex undertaking. But does it have to be this way? What if we could simplify this process dramatically?

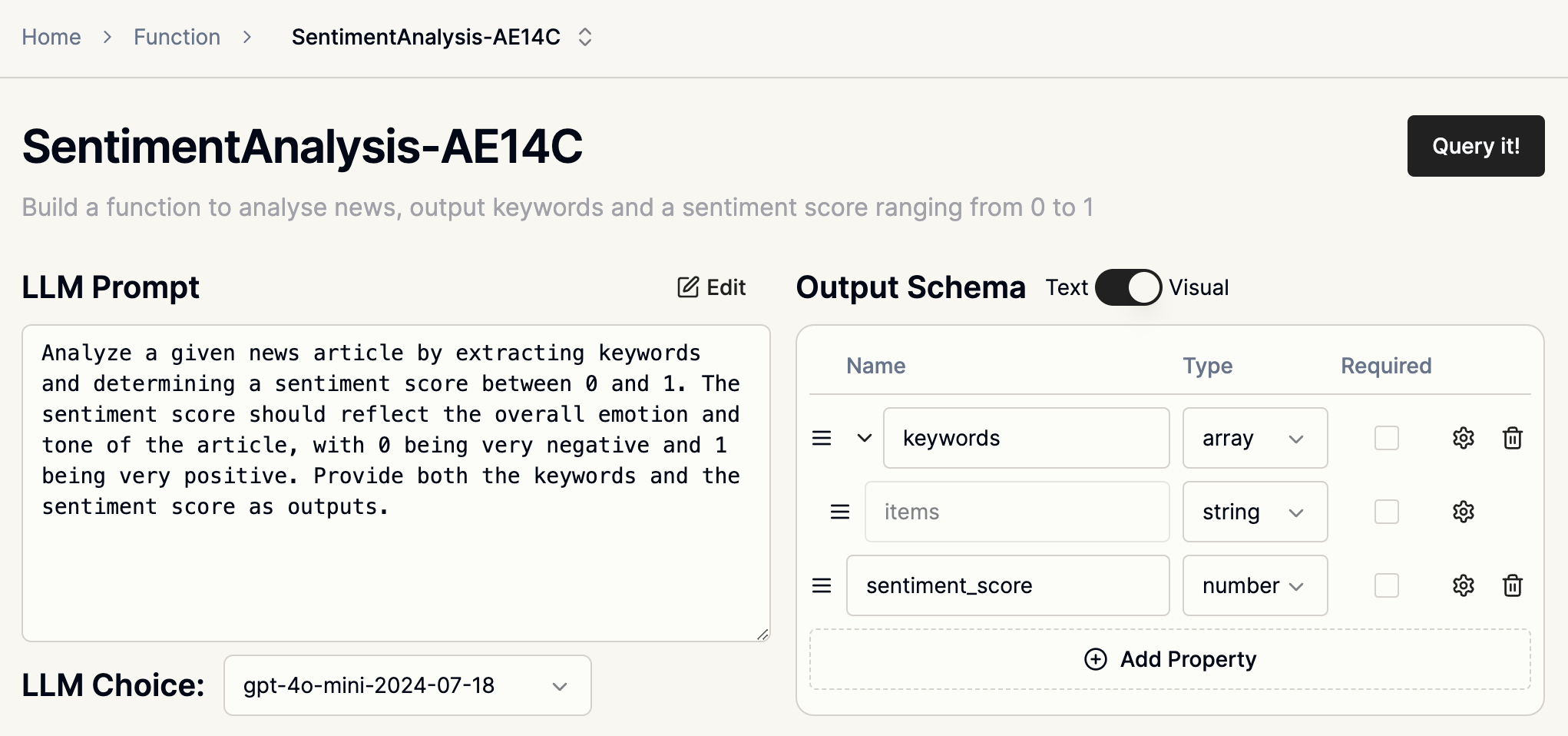

Introducing our AI Function Builder

Our AI Function Builder provides a way to harness the power of LLMs without getting lost in the technical weeds. The tool aims to bridge the gap between complex LLM capabilities and everyday development needs. Let's revisit our news analysis task, this time using AI Function Builder:

- Simply describe your needs in natural language, either through our cloud platform or API: "Build a function to analyze news, output keywords and a sentiment score ranging from 0 to 1"

- Within seconds, you have a specialized endpoint ready for use:

With the Weco Python client, you can then call it like a regular function:

import weco

article = """
The global economy showed signs of recovery in the third quarter,
with major stock indices posting significant gains. Experts attribute
this upturn to easing inflation pressures and the Federal Reserve's
decision to hold interest rates steady. However, geopolitical tensions
in Eastern Europe continue to cast a shadow over long-term economic
prospects. Despite these challenges, consumer confidence has reached
its highest level since the pandemic began, indicating a potentially
robust holiday shopping season ahead.
"""

# Direct call to the AI function
response = weco.query(
    fn_name="SentimentAnalysis-AE14C",
    fn_input=article
)

print(response)

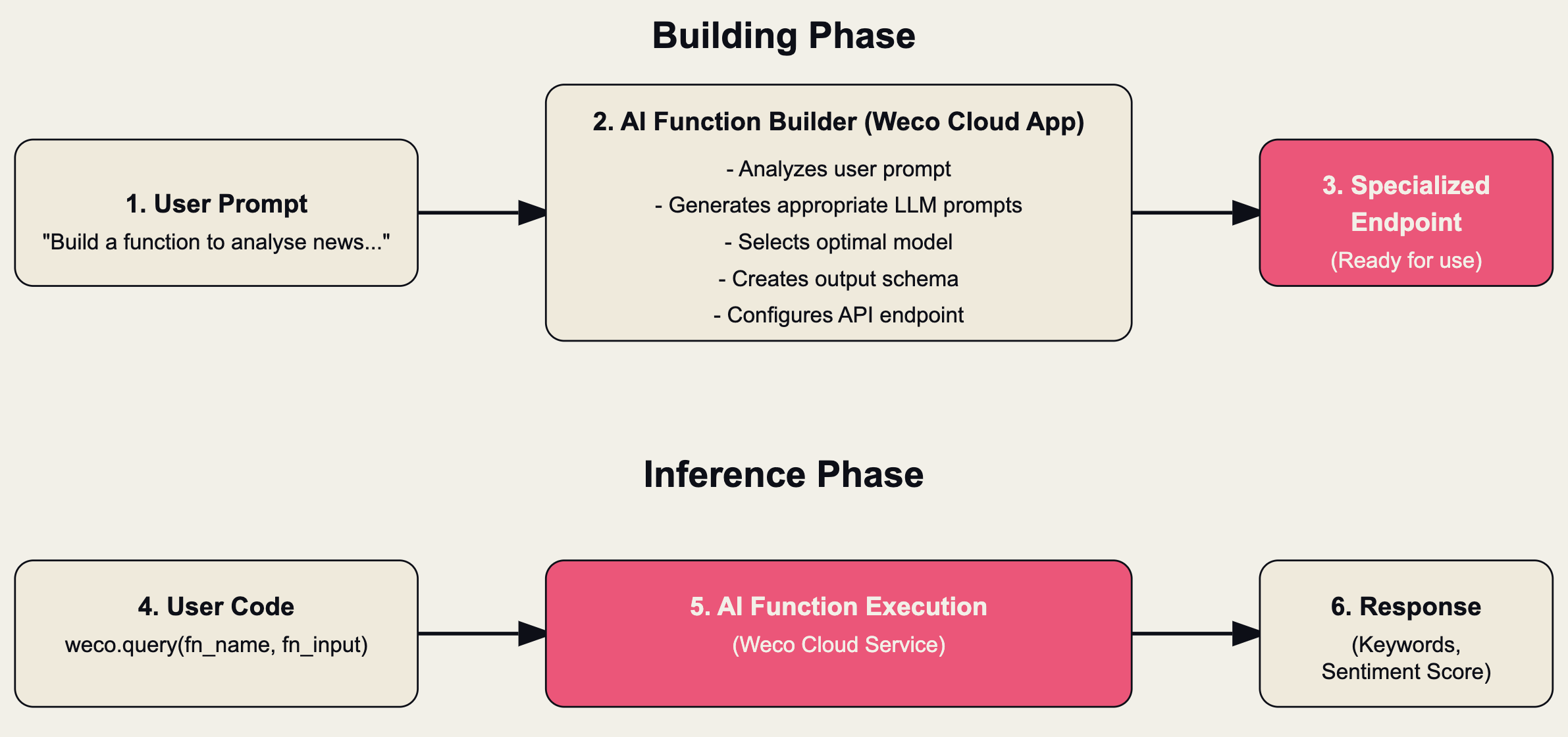

How does it work?

The diagram below shows how AI Function Builder works:

During the building stage, AI Function Builder analyzes your description, generates appropriate LLM prompts, selects an optimal model for your task, creates an output schema, and configures an API endpoint. For inference, Weco loads the generated prompts and uses either guided decoding APIs from LLM providers or Weco's own deployed models. These approaches constrain the LLM to output a structured JSON object that adheres to the previously created schema.
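To make the two stages concrete, here is a rough sketch of how they could fit together. It is not Weco's implementation: `FunctionSpec`, `build_function`, `run_function`, and `fake_generate` are hypothetical names, the building stage is hard-coded rather than derived from the description, and the schema-constrained LLM call is replaced by a canned stub so the example runs offline.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class FunctionSpec:
    """Hypothetical record of what the building stage produces."""
    name: str
    system_prompt: str
    model: str
    output_schema: dict


def build_function(description: str) -> FunctionSpec:
    # Stand-in for the building stage. A real builder would derive the
    # prompt, model, and schema from the description; here they are fixed.
    return FunctionSpec(
        name="news-analysis-demo",
        system_prompt=f"You are a function. Task: {description}",
        model="placeholder-model",
        output_schema={
            "type": "object",
            "properties": {
                "keywords": {"type": "array", "items": {"type": "string"}},
                "sentiment_score": {"type": "number"},
            },
            "required": ["keywords", "sentiment_score"],
        },
    )


def run_function(spec: FunctionSpec, fn_input: str,
                 generate: Callable[[str, str, dict], str]) -> dict:
    # `generate` stands in for a schema-constrained (guided decoding) LLM
    # call that returns a JSON string.
    raw = generate(spec.system_prompt, fn_input, spec.output_schema)
    output = json.loads(raw)
    # Defensive check: every required field from the schema must be present.
    missing = [k for k in spec.output_schema["required"] if k not in output]
    if missing:
        raise ValueError(f"output is missing required fields: {missing}")
    return output


def fake_generate(system_prompt: str, fn_input: str, schema: dict) -> str:
    # Canned response so the sketch runs without any model or network access.
    return json.dumps({"keywords": ["economy"], "sentiment_score": 0.75})


spec = build_function("Analyze news, output keywords and a sentiment score from 0 to 1")
print(run_function(spec, "Stocks rallied on Tuesday.", fake_generate))
```

The point of the split is that the prompt, model choice, and schema are fixed once at build time, so each later call only has to supply the input.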

From the user's perspective, the AI capability behaves like a strongly typed remote function. The API acts as your AI backend, abstracting away the complexities of LLM integration and providing a familiar programming interface.
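One way to lean into that typed-function view on the client side is to wrap the call in a small typed interface. The sketch below is illustrative only: `NewsAnalysis`, `analyze_news_typed`, and `fake_query` are hypothetical names, and it assumes the endpoint's response is a dict with `keywords` and `sentiment_score` keys, which the Weco client may or may not return in exactly that form.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class NewsAnalysis:
    """Typed view of the function's output (hypothetical)."""
    keywords: Tuple[str, ...]
    sentiment_score: float


def analyze_news_typed(article: str, query: Callable[..., dict]) -> NewsAnalysis:
    # `query` is injected so the sketch runs offline. In practice it could be
    # `weco.query`, ASSUMING the response is a dict with these two keys.
    raw = query(fn_name="SentimentAnalysis-AE14C", fn_input=article)
    return NewsAnalysis(
        keywords=tuple(raw["keywords"]),
        sentiment_score=float(raw["sentiment_score"]),
    )


def fake_query(fn_name: str, fn_input: str) -> dict:
    # Canned response standing in for the remote endpoint.
    return {"keywords": ["economy", "inflation"], "sentiment_score": 0.75}


analysis = analyze_news_typed("Stocks rallied on Tuesday.", fake_query)
print(analysis.keywords, analysis.sentiment_score)
```

Callers then work with attributes such as `analysis.sentiment_score` rather than string keys, which lets editors and type checkers catch misspelled field names.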

Create Your First AI Function in Seconds

Try Weco AI's function builder now at aifunction.com.